Genre:

AR

Platform:

Snapchat

Tools:

Lens Studio

Team:

Diana Kaiutina

Jintao Yu

Li Huang

Yu Zhang

Ziwei Niu

Project Overview

Findy Hunty offers an interactive, educational experience at Fordham Park that blends AR technology with nature. Designed to appeal to younger users and families, it guides them through different corners of the park and uses gesture recognition to make learning about nature feel interactive.

Although it runs on Snapchat, it is more than a filter: the experience unfolds over several stages, each of which requires further interaction from the user.

Concept

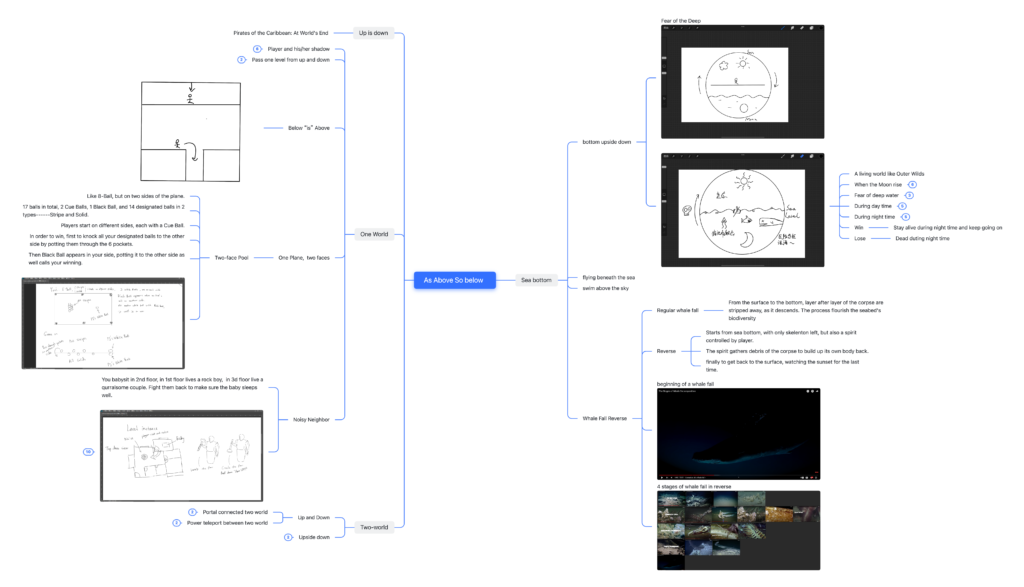

Findy Hunty is inspired by Meta Interactions such as Treasure Hunter and by similar present-day apps such as Geocaching, in which users move from place to place and discover items hidden by other users through a series of prompts.

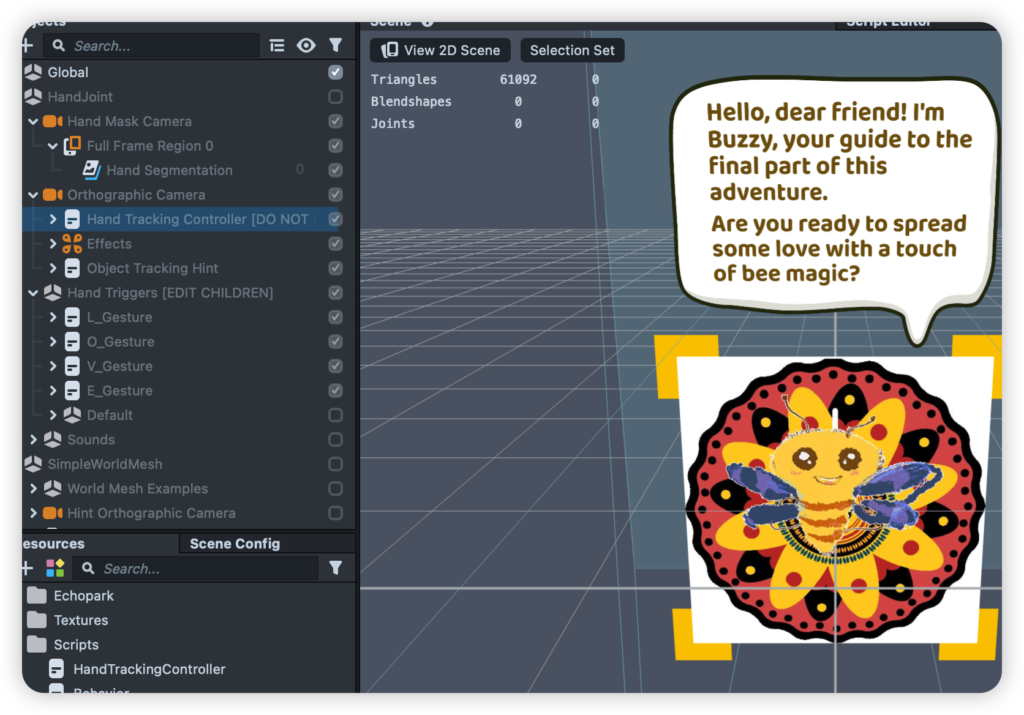

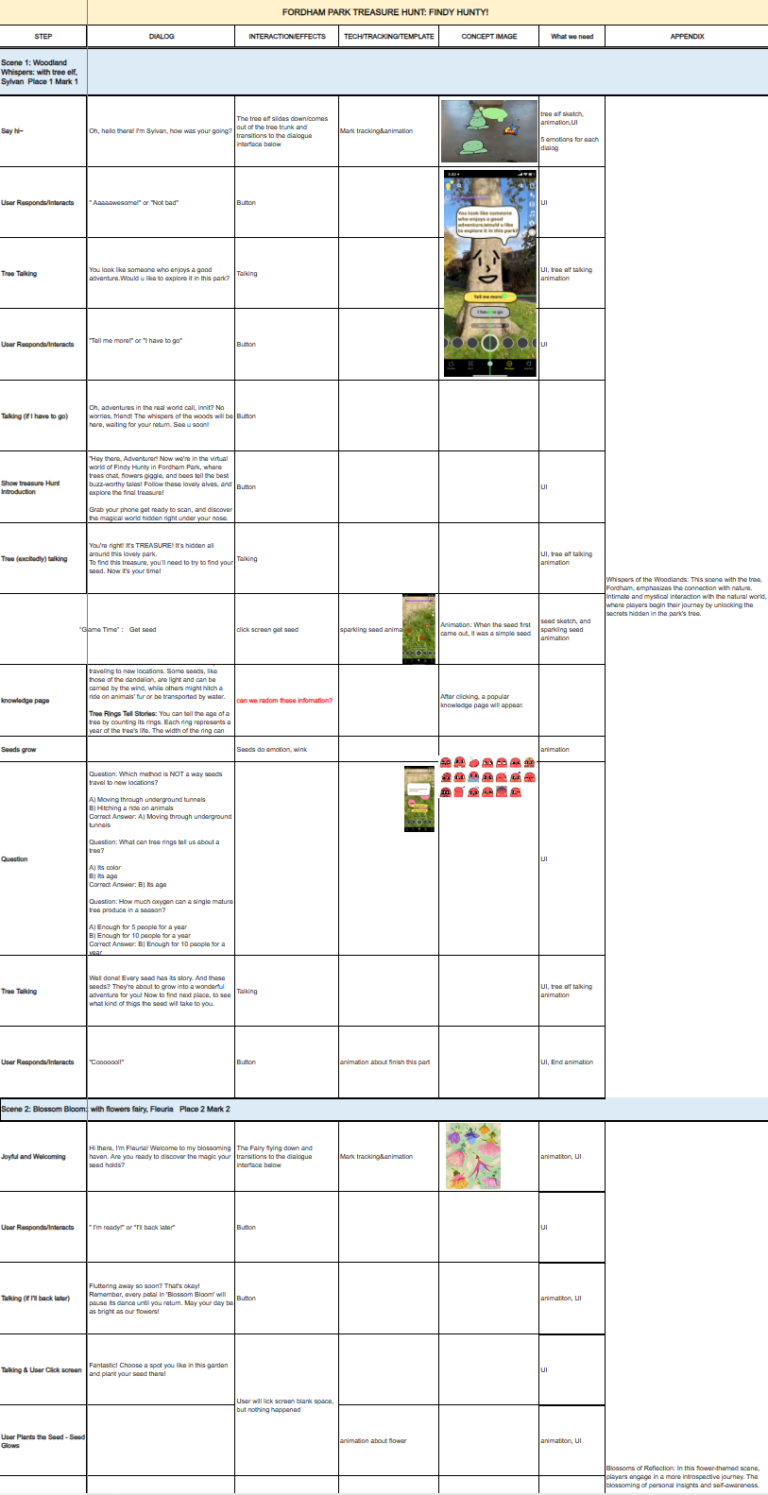

The final app uses AR to present a unique storyline set in the park. The story is split into scenes with different interactions, such as knowledge quizzes and gesture dialogues, and each scene guides the user to the next location.

User Experience

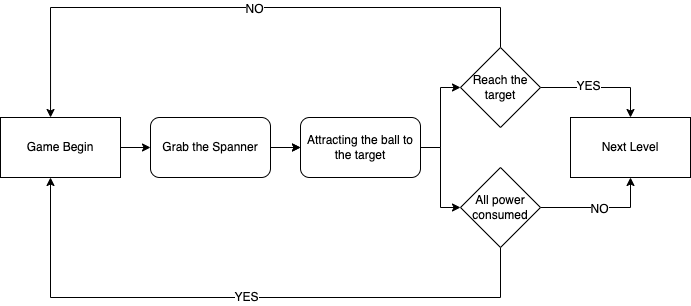

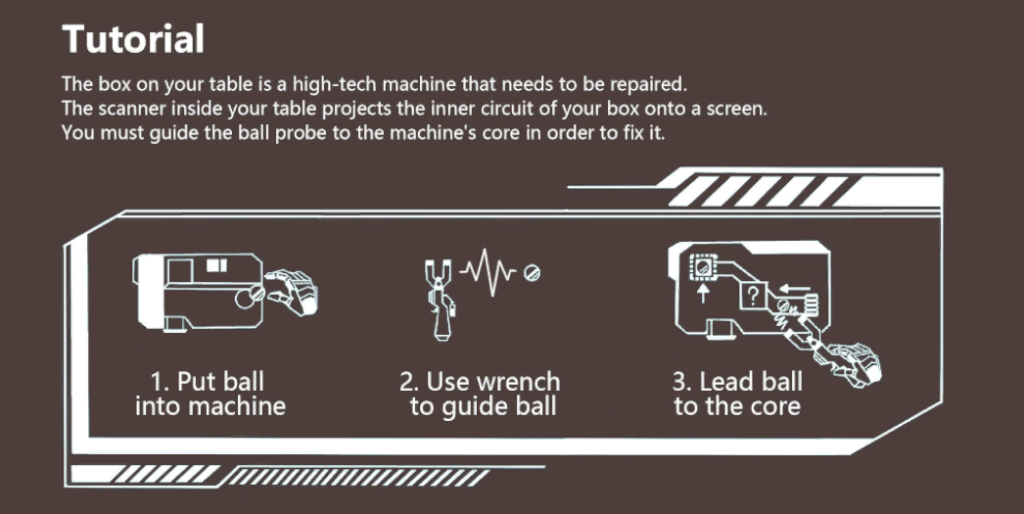

In Findy Hunty, users start their journey by finding markers in Fordham Park. They help the elves in different locations solve their problems and learn about nature while advancing the story through fun interactions. Because the target audience is children and families, the journey focuses on the user’s activity in real space, and there is no penalty for failure.

Personal Contribution

I have a background in game design and production, so I took on the role of engineer on the project.

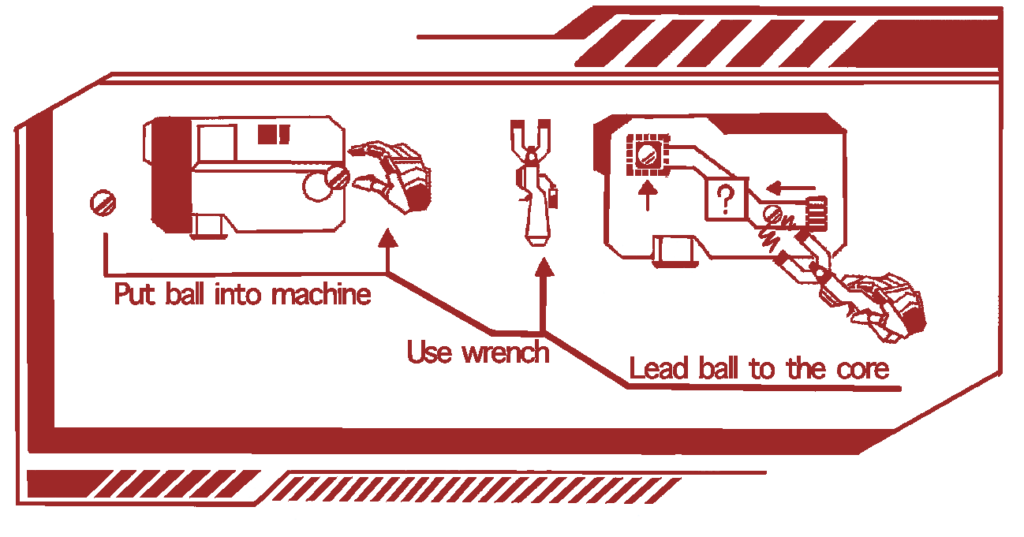

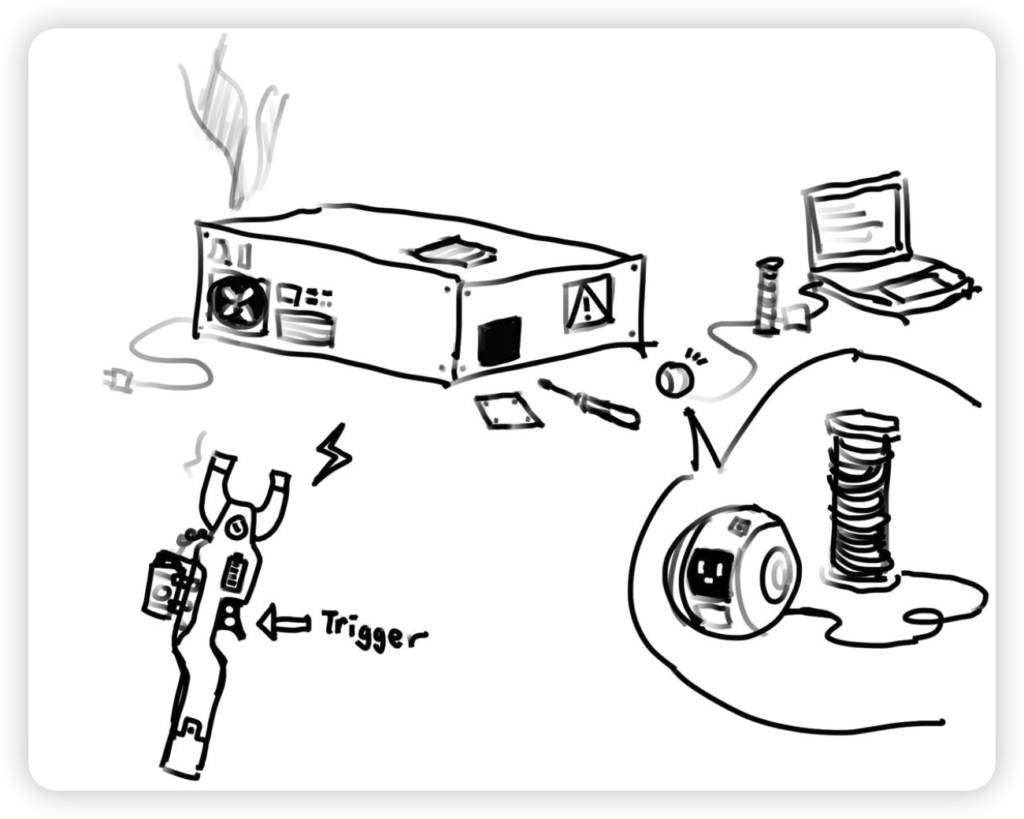

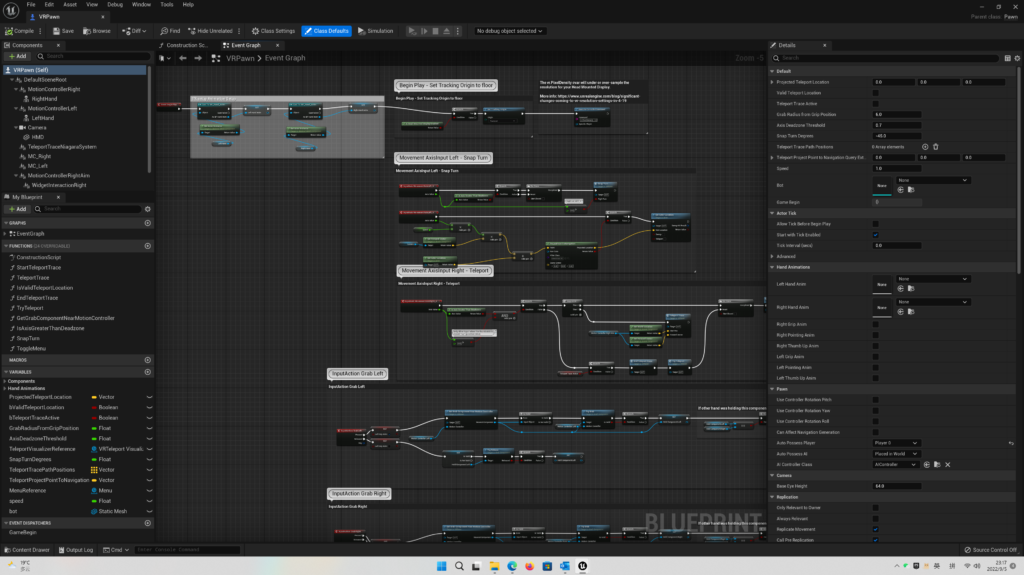

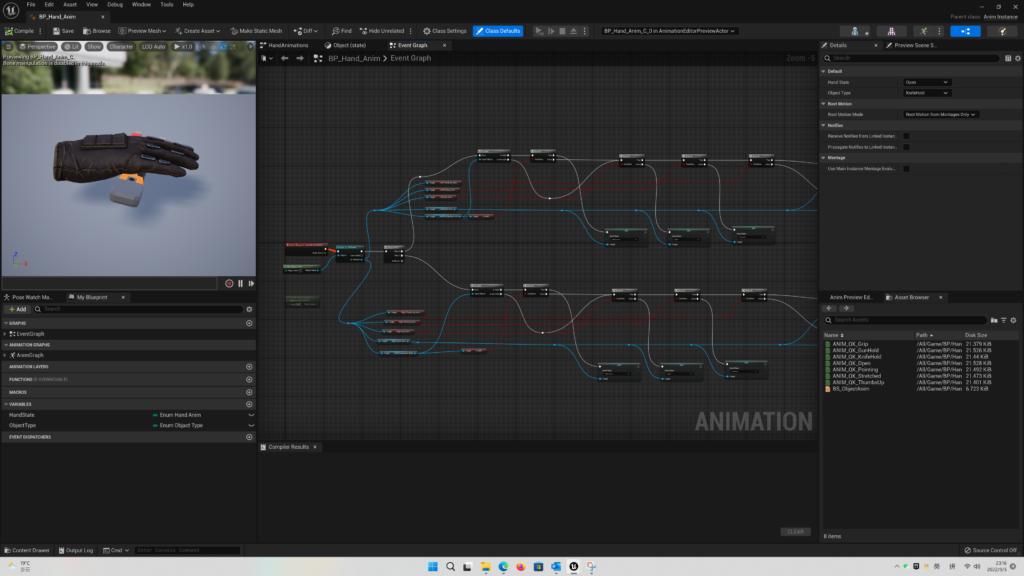

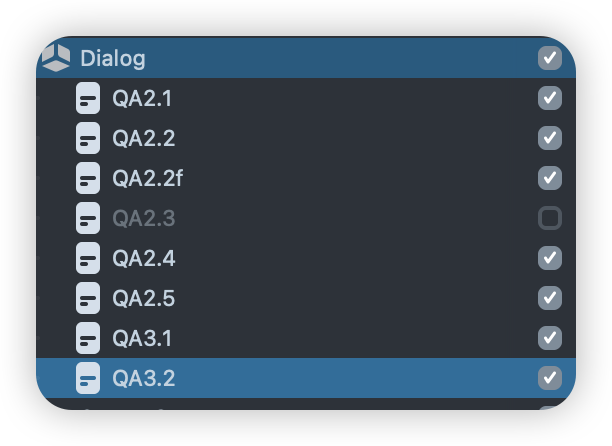

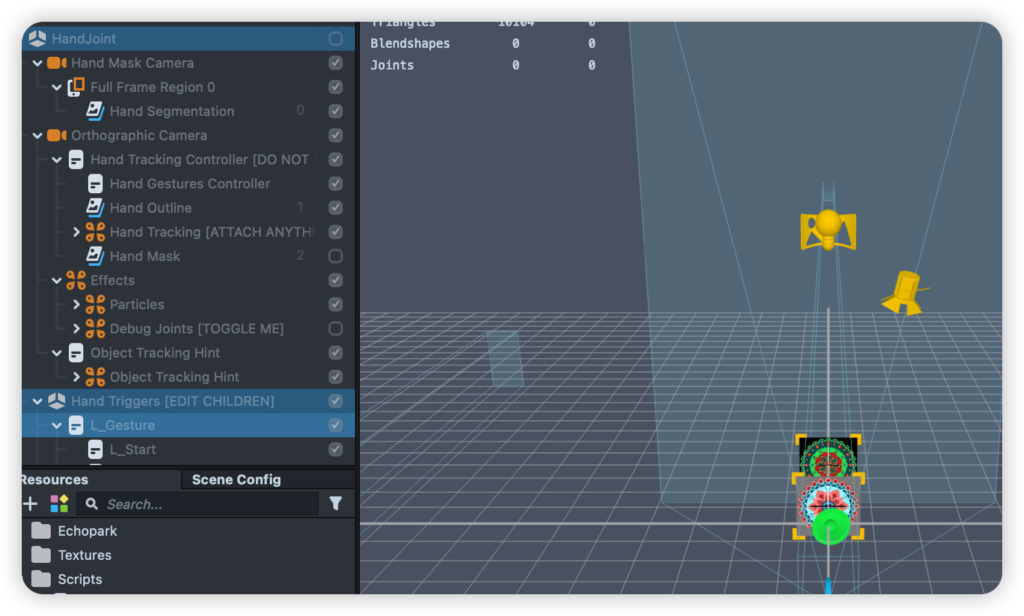

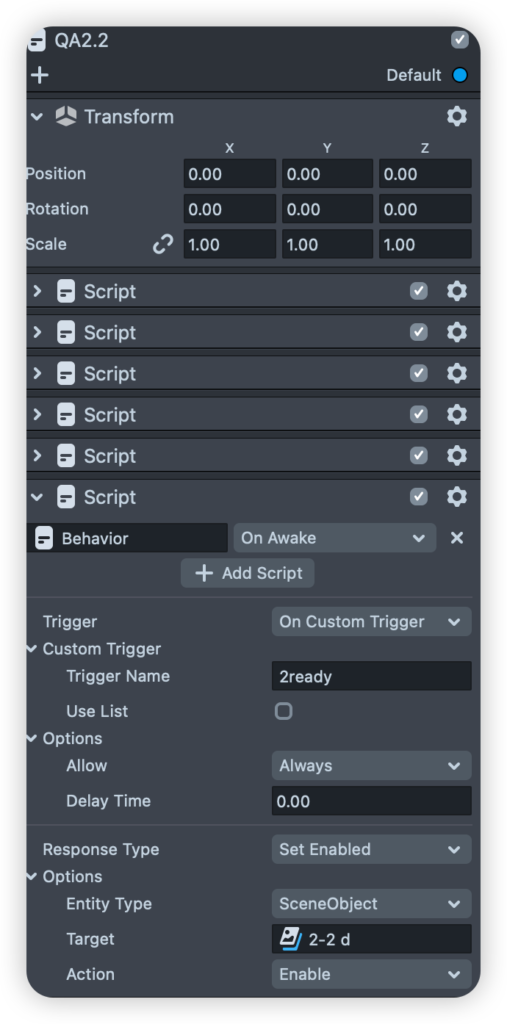

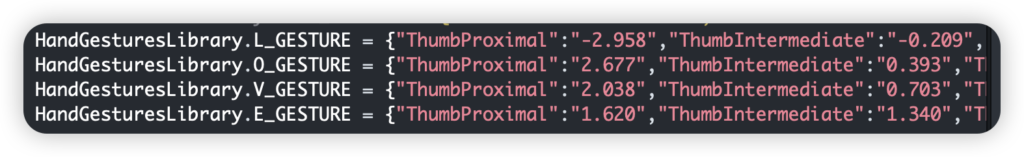

However, most of my experience has been with Unity and Unreal Engine, and I don’t know much JavaScript. My main job was therefore to integrate the art and sound assets made by the other students, place them in the editor scene, and then use the Behavior script to handle the logic: dialog and option flow, animation, the timing of sound effect playback and switching, and custom gesture recognition.
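To illustrate the kind of dialog and option logic mentioned above, here is a minimal sketch in TypeScript. It is not the project's actual Behavior script setup and it uses no Lens Studio API. The names `DialogFlow`, `DialogNode`, and `Option`, as well as the quiz content, are hypothetical and exist only for this example. It assumes each stage shows one prompt with several options, that a correct choice advances to the next stage, and that a wrong choice only shows a hint, in line with the "no penalty for failure" rule.

```typescript
// Hypothetical data model for illustration; not taken from the project.
type Option = { label: string; correct: boolean };
type DialogNode = { prompt: string; options: Option[]; hint: string };

class DialogFlow {
  private index = 0;
  private readonly nodes: DialogNode[];

  constructor(nodes: DialogNode[]) {
    this.nodes = nodes;
  }

  // The stage currently shown, or null once every stage is finished.
  current(): DialogNode | null {
    return this.index < this.nodes.length ? this.nodes[this.index] : null;
  }

  // Apply the player's choice and return the text to display next.
  // A correct option advances to the next stage; a wrong or invalid one
  // returns the hint and leaves the stage unchanged.
  choose(optionIndex: number): string {
    const node = this.current();
    if (node === null) {
      return "finished";
    }
    const option = node.options[optionIndex];
    if (option === undefined || !option.correct) {
      return node.hint;
    }
    this.index += 1;
    const next = this.current();
    return next === null ? "finished" : next.prompt;
  }
}

// Placeholder quiz content, invented for this sketch.
const exampleStages: DialogNode[] = [
  {
    prompt: "Which of these trees is an evergreen?",
    options: [
      { label: "Birch", correct: false },
      { label: "Pine", correct: true },
    ],
    hint: "Look for needles instead of broad leaves.",
  },
  {
    prompt: "Wave to the elf to say goodbye.",
    options: [{ label: "Wave", correct: true }],
    hint: "Try raising your hand in front of the camera.",
  },
];
```

Keeping the stage data separate from the flow logic means new scenes can be added by editing the list alone, which is the same reason a visual tool is convenient for someone who is not writing much script code.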

Reflection

With Findy Hunty, we successfully combined AR technology with environmental education to give users a new way to explore the park, although there is still much room for improvement.

This project was a bold attempt for me. Although the results were not as good as I had hoped, I learned valuable lessons from the team and gained a deeper understanding of AR technology and user experience design.

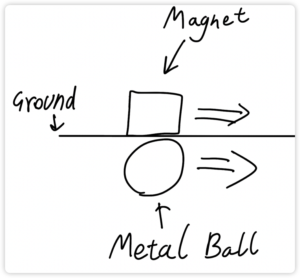

From my point of view, Mixed Reality (MR) is closer to what I expected from this project. It adds more interactive content on top of AR and extends the user experience from the phone screen into the real world, instead of just laying a virtual filter over it. In MR, the interaction is a necessary part of the experience and genuinely affects the user.

In terms of user experience, three students from the UXE Design program made a detailed plan for user interaction, target experience, and required materials. I had not worked this way before, because my earlier game designs focused on mechanics and gameplay, and it benefited me a lot. However, because we communicated too little at this stage, the volume of interactions got out of control and we did not have a good sense of what to expect.

At the beginning of the project design, I imagined an application with AR as the medium and real-world interaction as the core. Users would be guided through a fun game to explore the park and learn about real events that have taken place there. The target users might be teenagers from the neighborhood who visit often and for whom the park is part of daily life, but who don’t know how it became what it is today or what stories have happened in it.

After discussion, however, the team chose Lens Studio rather than Unity in order to create a stand-alone application for Snapchat, a platform with a much larger user base, and so focus on the main goal of distribution.

Because the platform turned out to be a poor fit, we ran into many unexpected problems during production. For example, we overlooked the screen space taken up by Snapchat’s own UI, so when we needed buttons for interaction, the extra layer of interactive buttons made the screen look messy. The large number of art assets across the three scenes also affected how smoothly Snapchat ran.

Overall, this project was full of surprises and challenges for me, but it also taught me a lot about both team communication and user experience. Through learning Lens Studio, I have also gained a better understanding of AR technology, which is crucial to my career development.